> FastAPI + scheduler = background tasks

How to integrate the scheduler library with FastAPI

In this blog post, we’ll explore how to integrate our open-source Python scheduler library with a FastAPI application. We’ll demonstrate this integration in the context of a hypothetical SaaS application called reminder_ai - a platform for scheduling AI-driven WhatsApp messages.

Another Scheduler for Python?

In 2021, we set out to create a scheduler library because we were dissatisfied with the ease of use of existing libraries. We wanted a tool that was simple, flexible, and aligned with our best practices. The scheduler library is the result of this effort and has since become a valuable part of our toolset.

We’ve used the scheduler library in various projects, including scheduling tasks for incoming webhook events from the Stripe API, aggregating and updating data on a daily basis, and managing data collection for machine learning applications.

A practical FastAPI Use Case: Reminder AI

Let’s imagine a SaaS platform called Reminder AI, which allows users to schedule AI-driven reminder messages for WhatsApp groups and individual users. Each user has a daily quota to manage ChatGPT costs. In this scenario, the platform is used to send reminder messages for an upcoming event. We’ll cover the following features:

- Scheduling a health check on the platform

- Scheduling a daily quota reset

- Scheduling weekly reminder messages for a WhatsApp group

- Implementing a “remind me” feature that allows users to schedule a single message for a specific time

Install FastAPI & Scheduler

We assume you have uv installed on your system for environment and package management. Feel free to use pip or any other package manager.

uv init reminder_ai_project
cd reminder_ai_project
uv add fastapi scheduler uvicorn
uv pip install -e .

You should now have a directory containing the following files:

tree --gitignore
.
├── main.py
├── pyproject.toml
├── README.md
└── uv.lock

Setting Up the FastAPI Application

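Let’s now create the complete file structure required for the FastAPI application. Start by creating the directories reminder_ai, reminder_ai/api and reminder_ai/api/v1, then add the remaining (for now empty) files as listed below. The main.py that uv init generated at the project root does not appear in this layout and can be removed: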

tree --gitignore
.
├── pyproject.toml
├── README.md
├── reminder_ai
│   ├── api
│   │   ├── __init__.py
│   │   └── v1
│   │       ├── __init__.py
│   │       ├── reminders.py
│   │       └── status.py
│   ├── __init__.py
│   ├── __main__.py
│   ├── main.py
│   ├── py.typed
│   └── scheduler.py
└── uv.lock

Let’s start by defining the entrypoint of our application. Here we serve the FastAPI app with the ASGI web server uvicorn:

# reminder_ai/__main__.py
import uvicorn


def main() -> None:
    uvicorn.run(
        "reminder_ai.main:app",
        host="127.0.0.1",
        port=8000,
        log_level="info",
        reload=True,
    )


if __name__ == "__main__":
    main()

For the main function to run successfully, uvicorn needs to find the reminder_ai.main:app entrypoint, which we set up next. Add the following imports to reminder_ai/main.py:

# reminder_ai/main.py
import asyncio
import datetime as dt
import random
import signal
from contextlib import asynccontextmanager
from typing import Any, AsyncGenerator

from fastapi import FastAPI

from reminder_ai.api import router_api
from reminder_ai.scheduler import UTC, Scheduler

We’ll define the router_api, UTC and Scheduler objects shortly. First, define a callback that stops the scheduler instance from processing further jobs when the FastAPI application shuts down:

# reminder_ai/main.py
def stop_scheduler(*args: Any) -> None:
    # *args absorbs the (signum, frame) arguments passed to signal handlers
    Scheduler.stop_scheduler()

Now we create asynchronous health check and token reset functions. The implementations below are dummies that take a short time to run in order to simulate a realistic workload:

# reminder_ai/main.py
async def fake_health_check() -> None:
    """Simulate a health check with random success/failure."""
    await asyncio.sleep(0.1)  # simulate some work
    status = "ok" if random.random() < 0.8 else "bad"
    print(f"[{dt.datetime.now()}] Platform health check: {status}")


async def fake_reset_daily_token_quota() -> None:
    """Simulate a daily token quota reset."""
    print(f"[{dt.datetime.now()}] Starting to reset daily token quotas for all users")
    await asyncio.sleep(0.5)  # simulate some work
    print(f"[{dt.datetime.now()}] Successfully reset daily token quotas for all users")

These functions can now be scheduled within FastAPI’s lifespan context:

# reminder_ai/main.py
@asynccontextmanager
async def lifespan(app: FastAPI) -> AsyncGenerator[None, None]:
    Scheduler.start_scheduler()
    signal.signal(signal.SIGINT, stop_scheduler)
    Scheduler.schedule.cyclic(dt.timedelta(seconds=30), fake_health_check)
    Scheduler.schedule.daily(
        dt.time(hour=0, minute=0, second=0, tzinfo=UTC), fake_reset_daily_token_quota
    )
    yield


app = FastAPI(
    title="Reminder AI",
    lifespan=lifespan,
)
app.include_router(router=router_api, prefix="/api")

Using signal.signal, we have registered the stop_scheduler function as the handler for the SIGINT signal. The handler is invoked, for example, when pressing <CTRL> + <C>, and tells the scheduler to stop processing jobs.
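As an alternative to a signal handler, the cleanup can also live after the yield of the lifespan function, because an async context manager runs the code before yield on entry and the code after it on exit. The following is a minimal sketch of that ordering using only the standard library. FakeScheduler and the events list are hypothetical stand-ins for illustration and are not part of FastAPI or the scheduler library:

```python
import asyncio
from contextlib import asynccontextmanager
from typing import AsyncGenerator


class FakeScheduler:
    """Hypothetical stand-in that only tracks a running flag."""

    def __init__(self) -> None:
        self.running = False

    def start(self) -> None:
        self.running = True

    def stop(self) -> None:
        self.running = False


events: list[str] = []


@asynccontextmanager
async def lifespan(scheduler: FakeScheduler) -> AsyncGenerator[None, None]:
    scheduler.start()  # runs on entry (application startup)
    events.append("started")
    try:
        yield  # the application would serve requests here
    finally:
        scheduler.stop()  # runs on exit (application shutdown), even on errors
        events.append("stopped")


async def demo() -> FakeScheduler:
    scheduler = FakeScheduler()
    async with lifespan(scheduler):
        events.append(f"serving, running={scheduler.running}")
    return scheduler


final_scheduler = asyncio.run(demo())
```

Whether this fits better than a signal handler depends on the deployment; the sketch only shows where startup and shutdown code end up running.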

We used Scheduler.start_scheduler to start the processing of jobs. Scheduler is a singleton that holds the actual scheduler.asyncio.Scheduler instance in an attribute we have named schedule.

The Scheduler.schedule.cyclic function allows us to schedule a task to run repeatedly at a given interval, such as every 30 seconds. In our example, it’s used for the simulated health check, ensuring the system remains responsive. Meanwhile Scheduler.schedule.daily schedules a task to run once per day at a specific UTC time, such as midnight, which is ideal for resetting daily quotas.
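To make the difference between the two timing modes concrete, here is a small standard-library sketch that computes when the next run of each kind of job would be due. The helper names next_cyclic_run and next_daily_run are hypothetical, and the code illustrates the timing semantics only; it is not how the scheduler library computes them internally:

```python
import datetime as dt

UTC = dt.timezone.utc


def next_cyclic_run(last_run: dt.datetime, interval: dt.timedelta) -> dt.datetime:
    """Next run of a job that repeats at a fixed interval."""
    return last_run + interval


def next_daily_run(now: dt.datetime, at: dt.time) -> dt.datetime:
    """Next occurrence of the wall-clock time `at` strictly after `now`.

    Assumes `now` and `at` share the same timezone (UTC in this tutorial).
    """
    candidate = dt.datetime.combine(now.date(), at)  # keeps the tzinfo of `at`
    if candidate <= now:
        candidate += dt.timedelta(days=1)  # today's slot has already passed
    return candidate


now = dt.datetime(2025, 6, 6, 11, 30, tzinfo=UTC)
health_check_due = next_cyclic_run(now, dt.timedelta(seconds=30))
quota_reset_due = next_daily_run(now, dt.time(hour=0, minute=0, tzinfo=UTC))
# health_check_due is 2025-06-06 11:30:30 UTC
# quota_reset_due is 2025-06-07 00:00:00 UTC
```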

Let’s now look at how to implement the Scheduler singleton in scheduler.py:

# reminder_ai/scheduler.py
import asyncio
import datetime as dt

from scheduler.asyncio import Scheduler as AioScheduler

UTC = dt.timezone.utc


class Scheduler:
    is_running_event = asyncio.Event()
    schedule: AioScheduler

    @classmethod
    def start_scheduler(cls) -> None:
        cls.schedule = AioScheduler(tzinfo=UTC)
        cls.is_running_event.set()

    @classmethod
    def stop_scheduler(cls) -> None:
        cls.is_running_event.clear()

    @classmethod
    def is_running(cls) -> bool:
        return cls.is_running_event.is_set()

Note the is_running method, which exposes the scheduler’s state via an asyncio.Event. It can be used in async loops to control execution and enable graceful exits.
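A loop controlled by such a flag could look like the sketch below. It uses a plain asyncio.Event instead of the Scheduler singleton so that it runs on its own; the worker function and the tick counter are hypothetical and only serve to show the exit condition:

```python
import asyncio


async def worker(is_running: asyncio.Event, ticks: list[int]) -> None:
    """Do a unit of work per iteration until the running flag is cleared."""
    count = 0
    while is_running.is_set():
        count += 1
        ticks.append(count)
        await asyncio.sleep(0.01)  # yield control so the flag can change


async def demo() -> list[int]:
    is_running = asyncio.Event()
    is_running.set()
    ticks: list[int] = []
    task = asyncio.create_task(worker(is_running, ticks))
    await asyncio.sleep(0.05)
    is_running.clear()  # plays the role of Scheduler.stop_scheduler()
    await task  # the worker leaves its loop on its own; no cancellation needed
    return ticks


ticks = asyncio.run(demo())
```

Because the worker checks the flag once per iteration, it finishes its current unit of work before exiting, which is what makes the shutdown graceful.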

Defining the tasks for the reminders API

Let’s take care of the required imports and define the reminders router:

# reminder_ai/api/v1/reminders.py
import asyncio
import datetime as dt
from uuid import UUID

from fastapi import APIRouter
from fastapi.responses import PlainTextResponse
from pydantic import BaseModel
from scheduler.trigger import weekday as weekday_factory

from reminder_ai.scheduler import UTC, Scheduler

ROUTE_REMINDERS = "reminders"
router_reminders = APIRouter(tags=[ROUTE_REMINDERS])

We can now define the fake_remind function, which simulates generating and sending reminder messages to users and groups:

# reminder_ai/api/v1/reminders.py
async def fake_remind(
    *,
    user_id: UUID | None = None,
    group_id: UUID | None = None,
    prompt: str,
) -> None:
    t_generate = "[{entity}] Generate reminder message with prompt: {prompt}..."
    t_remind = "[{entity}] Sending reminder message via WhatsApp API"

    match user_id, group_id:
        case UUID() as uuid, None:
            print(t_generate.format(entity=f"User {uuid}", prompt=prompt[:20]))
            await asyncio.sleep(4)  # simulate request to llm api
            print(t_remind.format(entity=f"User {uuid}"))
        case None, UUID() as uuid:
            print(t_generate.format(entity=f"Group {uuid}", prompt=prompt[:20]))
            await asyncio.sleep(4)  # simulate request to llm api
            print(t_remind.format(entity=f"Group {uuid}"))
        case _:
            raise ValueError("Exactly one of user_id or group_id is required.")

Here we have used Python’s structural pattern matching to handle both user and group reminders. We can now define the /users/{user_id} endpoint:

# reminder_ai/api/v1/reminders.py
class V1RemindersUsers_Post_Body(BaseModel):
    prompt: str
    schedule_time: dt.datetime | None = None  # None means now


@router_reminders.post("/users/{user_id}")
async def _(*, user_id: UUID, body: V1RemindersUsers_Post_Body) -> PlainTextResponse:
    Scheduler.schedule.once(
        body.schedule_time or dt.timedelta(),
        fake_remind,
        kwargs={"user_id": user_id, "prompt": body.prompt},
    )
    return PlainTextResponse(content="OK")

Scheduler.schedule.once schedules a job to run once, either at a specific time or after a certain delay. A timedelta of zero means the job runs as soon as the asyncio event loop allows it. We also used the kwargs argument to pass user_id and prompt to the fake_remind function.
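Since the scheduler in this tutorial is created with tzinfo=UTC, it seems prudent to hand it timezone-aware timestamps only, while clients may send naive ones or ones in another timezone. The sketch below shows one way to normalize schedule_time before scheduling. The helper normalize_schedule_time is hypothetical, and its two policies (naive timestamps are read as UTC, past timestamps mean "run now") are assumptions of this sketch rather than behavior of the scheduler library:

```python
import datetime as dt
from typing import Optional, Union

UTC = dt.timezone.utc


def normalize_schedule_time(
    schedule_time: Optional[dt.datetime],
    now: dt.datetime,
) -> Union[dt.datetime, dt.timedelta]:
    """Return a UTC datetime, or a zero delay meaning 'run now'.

    Assumptions of this sketch: naive timestamps are interpreted as UTC,
    and timestamps that are not in the future are treated like 'now'.
    """
    if schedule_time is None:
        return dt.timedelta()
    if schedule_time.tzinfo is None:
        schedule_time = schedule_time.replace(tzinfo=UTC)
    schedule_time = schedule_time.astimezone(UTC)
    if schedule_time <= now:
        return dt.timedelta()
    return schedule_time


now = dt.datetime(2025, 6, 6, 11, 0, tzinfo=UTC)
berlin_summer = dt.timezone(dt.timedelta(hours=2))

run_now = normalize_schedule_time(None, now)
from_naive = normalize_schedule_time(dt.datetime(2025, 6, 6, 11, 30), now)
from_offset = normalize_schedule_time(
    dt.datetime(2025, 6, 6, 13, 30, tzinfo=berlin_summer), now
)
from_past = normalize_schedule_time(dt.datetime(2025, 6, 5, 9, 0, tzinfo=UTC), now)
```

In the endpoint, the result of such a helper could be passed as the first argument of Scheduler.schedule.once in place of body.schedule_time or dt.timedelta().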

Likewise, we can define an endpoint that sends a reminder message to a given group on a weekly basis:

# reminder_ai/api/v1/reminders.py
class V1RemindersGroups_Post_Body(BaseModel):
    prompt: str
    weekday: int  # 0: Monday, ..., 6: Sunday
    n_weeks: int


@router_reminders.post("/groups/{group_id}")
async def _(
    *,
    group_id: UUID,
    body: V1RemindersGroups_Post_Body,
) -> PlainTextResponse:
    day_and_time = weekday_factory(body.weekday, dt.time(hour=9, minute=0, tzinfo=UTC))
    Scheduler.schedule.weekly(
        day_and_time,
        fake_remind,
        kwargs={"group_id": group_id, "prompt": body.prompt},
        max_attempts=body.n_weeks,
    )
    return PlainTextResponse(content="OK")

Here we use the scheduler.trigger.weekday function to define the day and time of the week at which the reminder is sent. The max_attempts parameter limits the total number of reminders this job can send.
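To see which dates a request with a given weekday and n_weeks would cover, the following standard-library sketch lists the expected send times. The function weekly_occurrences is hypothetical and only mirrors the intent of the endpoint (09:00 UTC on the chosen weekday, limited to n_weeks runs); the scheduler library does its own bookkeeping. The range checks are an addition of this sketch, since the request model above accepts any integer:

```python
import datetime as dt

UTC = dt.timezone.utc


def weekly_occurrences(
    now: dt.datetime,
    weekday: int,
    at: dt.time,
    n_weeks: int,
) -> list[dt.datetime]:
    """List the next `n_weeks` occurrences of `weekday` (0: Monday) at time `at`."""
    if not 0 <= weekday <= 6:
        raise ValueError("weekday must be in the range 0 (Monday) to 6 (Sunday)")
    if n_weeks < 1:
        raise ValueError("n_weeks must be at least 1")
    days_ahead = (weekday - now.weekday()) % 7
    first = dt.datetime.combine(now.date() + dt.timedelta(days=days_ahead), at)
    if first <= now:
        first += dt.timedelta(weeks=1)  # today's slot has already passed
    return [first + dt.timedelta(weeks=i) for i in range(n_weeks)]


now = dt.datetime(2025, 6, 6, 11, 0, tzinfo=UTC)  # a Friday
reminders = weekly_occurrences(now, weekday=2, at=dt.time(9, 0, tzinfo=UTC), n_weeks=3)
# Wednesdays at 09:00 UTC: 2025-06-11, 2025-06-18 and 2025-06-25
```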

Defining the status API for the Scheduler

The scheduler keeps its state in the form of a list of active jobs. Passing the scheduler to the built-in str returns a human-readable table of that state, which makes a status API easy to implement:

# reminder_ai/api/v1/status.py
from fastapi import APIRouter
from fastapi.responses import PlainTextResponse

from reminder_ai.scheduler import Scheduler

ROUTE_STATUS = "status"
router_status = APIRouter(tags=[ROUTE_STATUS])


@router_status.get("/scheduler")
async def _() -> PlainTextResponse:
    return PlainTextResponse(content=str(Scheduler.schedule), status_code=200)

Register FastAPI routes

FastAPI provides a simple hierarchical router system, which we use to register the endpoints defined in the sections above:

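# reminder_ai/api/v1/__init__.py
from fastapi import APIRouter

from reminder_ai.api.v1.reminders import ROUTE_REMINDERS, router_reminders
from reminder_ai.api.v1.status import ROUTE_STATUS, router_status

router_v1 = APIRouter()
router_v1.include_router(router_reminders, prefix=f"/{ROUTE_REMINDERS}")
router_v1.include_router(router_status, prefix=f"/{ROUTE_STATUS}")

The listing for reminder_ai/api/__init__.py is not shown here. Judging from the import in reminder_ai/main.py and the /api/v1/... URLs used in the next section, it defines router_api and includes router_v1 under the /v1 prefix in the same way.

The FastAPI Application in Action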

We have now implemented a basic application with multiple endpoints interfacing with the scheduler. The application can be run locally and accessed via HTTP requests. To start the application, run the following command:

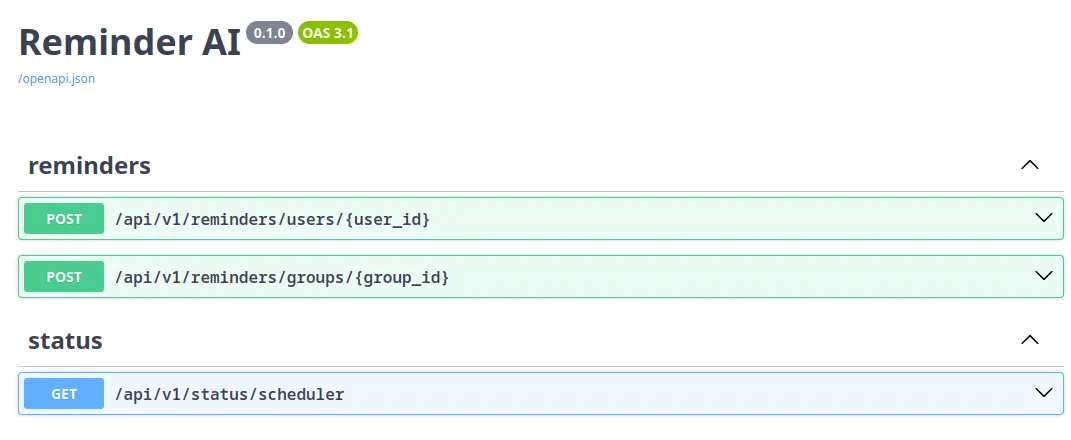

uv run python -m reminder_ai

By default, FastAPI serves a Swagger UI at http://127.0.0.1:8000/docs, which provides an interactive interface for testing API endpoints.

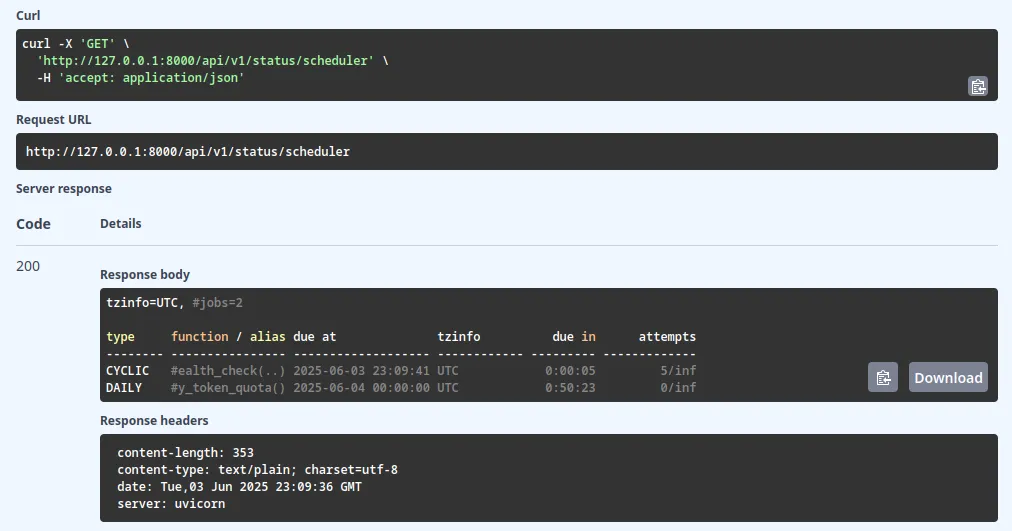

Let us first check what the status endpoint does and whether the scheduler is running as expected. Expand the accordion labeled /api/v1/status/scheduler, then click the Try it out button. This reveals the Execute button; clicking it sends a GET request and displays the response. If everything is working as expected, you should see a response similar to the following:

The interface additionally provides a curl command that you can use to test the endpoint from the command line. Together with watch, you can monitor the state of the scheduler in real time:

watch -n 1 curl -s http://localhost:8000/api/v1/status/scheduler

With the -n 1 option, watch refreshes the output every second. Now we can directly observe what the scheduler is doing when we interact with the other endpoints.

Let us schedule a single reminder for the user with id 07861d07-7f90-4f3d-bfbc-8b4d44d1679e at the provided UTC time 2025-06-06T11:30:00.000Z:

curl -X 'POST' \
  'http://127.0.0.1:8000/api/v1/reminders/users/07861d07-7f90-4f3d-bfbc-8b4d44d1679e' \
  -H 'accept: application/json' \
  -H 'Content-Type: application/json' \
  -d '{
  "prompt": "Write user X a nice reminder to register for the upcoming event!",
  "schedule_time": "2025-06-06T11:30:00.000Z"
}'

Likewise, schedule a group reminder for the group with id 8e4f7f3d-3680-4f7b-b915-dc0452a330d6, which reminds the group members of the upcoming event every Wednesday for the next 3 weeks:

curl -X 'POST' \
  'http://127.0.0.1:8000/api/v1/reminders/groups/8e4f7f3d-3680-4f7b-b915-dc0452a330d6' \
  -H 'accept: application/json' \
  -H 'Content-Type: application/json' \
  -d '{
  "prompt": "Write a nice reminder for the python WhatsApp group to register for the upcoming event!",
  "weekday": 2,
  "n_weeks": 3
}'

You should be able to see in real time how the scheduler handles the jobs and updates its status.
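The same group request can also be prepared from Python. The sketch below builds it with the standard library only and does not send it, so it runs without a server. BASE_URL and the helper build_group_reminder_request are names chosen for this example:

```python
import json
import urllib.request
from uuid import UUID

# Assumes the application runs locally as configured in reminder_ai/__main__.py
BASE_URL = "http://127.0.0.1:8000/api/v1/reminders"


def build_group_reminder_request(
    group_id: UUID,
    prompt: str,
    weekday: int,
    n_weeks: int,
) -> urllib.request.Request:
    """Build (but do not send) the POST request for a weekly group reminder."""
    payload = {"prompt": prompt, "weekday": weekday, "n_weeks": n_weeks}
    return urllib.request.Request(
        url=f"{BASE_URL}/groups/{group_id}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "accept": "application/json"},
        method="POST",
    )


request = build_group_reminder_request(
    group_id=UUID("8e4f7f3d-3680-4f7b-b915-dc0452a330d6"),
    prompt="Write a nice reminder for the python WhatsApp group!",
    weekday=2,  # Wednesday
    n_weeks=3,
)
# With the server running, the request can be sent with:
# urllib.request.urlopen(request)
```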

Additional Scheduler Features

While this tutorial covers many of the scheduler’s key features, there are several other capabilities worth exploring:

- Job threading

- Scheduling with timezones

- Job prioritization

- Job tagging

- Job batching

- Scheduler & job metadata

Recap

We’ve explored how to integrate the Python scheduler with a FastAPI application and demonstrated how to schedule various types of jobs, including:

- Regular health checks

- Daily quota resets

- Weekly reminders

- One-time reminders

The full source code is available on GitHub. Let us know what kind of content you’d like to see next. We look forward to your feedback and suggestions. You can reach out to us by opening an issue on the GitHub repository or by sending an email.